(Note: another thing I wrote a while ago)

The book ‘Producing Open Source Software’ by Karl Fogel has a wonderful cover (pictured). It shows many smaller arrows of varying sizes all pointing to the right, and a larger yellow arrow that seems to me to be their implied combined effect. If you have all your horses pulling in one direction, you can move a house, it seems to say.

The image comes to me like that because it reminds me a lot of various diagrams you would make in physics class. If the ball hits the other ball at such and such an angle, where does the shoe drop? That kind of thing.

It’s a beautiful image, and it’s the one I had of Free/Open software before I got involved in it. All these people pulling in the same direction to make an encyclopaedia, or an operating system. But I tell you, it’s very wrong until you hit maturity.

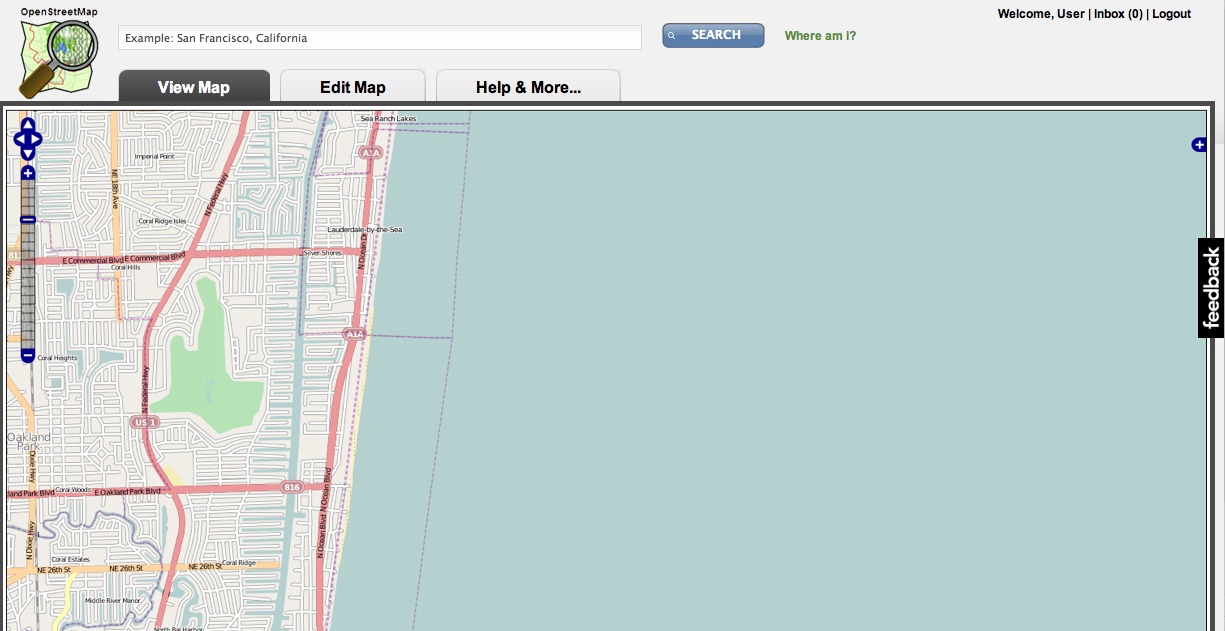

First, the size of the arrows. The distribution of effort in collaborative projects can vary an awful lot. When a project starts its effort is often distributed like this:

Stage 1: One man band Effort / Frequency graph

What the hell does that mean? It means that one person is putting a lot of effort in. It’s low on the frequency scale (there’s only one person). It’s high on the effort scale because they’re doing all the coding, making the website work, replying to email, battling rival projects with dumb ideas, coming up with better ideas and so on. It’s the most critical phase of a project’s life and it’s why so many projects are synonymous with the people who started them. Or at least with the myth of who started them, as successful projects attract people willing to claim credit for things that went right. Linux, Linus. Wikipedia, Jimbo Wales. Mono, Miguel de Icaza. Debian, Ian Murdock.

There are thousands of smaller projects still effectively run by the guys who started them. File utilities, mouse drivers, deployment tools, image tools and so on. When they get big, in many cases they retain their founders because if they don’t, they tend to fuck up. Committees are not good at running large distributed projects which rely on people’s free(!) time. Benevolent dictators tend to work out well, and when they don’t, the project forks or fails and a hundred other flowers bloom.

Think about Debian vs. Ubuntu in this context. And if you have no idea what that means, it’s not important.

The next stage for these projects hinges a lot on the temperament of the guy running it. Often they won’t accept new ideas, or they’re only doing it for publicity (which often, behind the scenes, means money). Or they get bored, or they want to control everything, or they don’t have the time. There are millions of reasons. When OSM started there were two existing similar projects I knew of. One was geowiki.com, started by Richard Fairhurst and friends. It was (and is) a beautiful site that arguably required a lot of effort to make, but it didn’t have any community participation (mailing lists and so on). It stagnated because nobody could get involved and all the data, code and tools were locked up.

Second, there is free-map.org.uk which is run to this day by Nick Whitelegg. It is very focused on the walker. Here in England we have a large community of people who walk all over the place on rights of way (which often cross private land). Free-map catered to those users and Nick throws a lot of effort at it, but it remains mostly Nick because of its focus. Nick has thousands of great ideas but only Nick to implement them. In his spare time.

When OSM started it was light years behind these projects but like them it essentially rejected the geo-dogma. Today, Nick and Richard are key contributors to OSM.

After the first ever OSM talk, freemap was set up. It was very top down, using all the latest standards and it got basically nowhere despite good intentions because it concentrated on technology, not community.

- Community is everything. OSM only ever sought to make maps. Not the prettiest maps. Not the best maps. Not the fastest maps. Not even maps that worked in 3 out of 24 hours. What it did (and does) is make map making as simple as possible both technically and socially.

- The second cornerstone was getting out of the way. All the code was open. All the data was open. It was as open as you could possibly make it, barring privacy constraints.

These two things were the key, and what separated OSM from the other projects. They’re fairly easy to posit and talk about and wave around. The third pillar is not.

- Only let the people doing actual work talk. Everyone else can bugger off.

OSM for a good two years was full of people with brilliant designs for space ships. I love space ships. I love flying cars. I am still disappointed every time I see a DeLorean that it doesn’t vertically take off. But a space ship requires highly paid experts from NASA to come build it. And technicians to make sure it’s ready to fly. And a ground crew in case there’s a problem. These are all wonderful things, but rarely do you get them for free in someone’s spare time.

OSM on the other hand was Buzz Lightyear’s cardboard spaceship. It looked like a space ship. If you threw it and squinted for a few seconds before turning away then it kind of flew. It was held together with string and glue. It was a dog. But, crucially, it worked. Anyone could contribute to it, anyone could have the data. It was the only project with a simple API that anyone could talk to.

Continually there were calls to strap jet engines on (this was a mooted feature for the Space Shuttle by the way) or inter-galactic warp drive. Mostly these people were told to bugger off unless they designed, built and strapped on the rockets.

Why such a harsh attitude? Because building something like OSM is hard. It requires love and time and attention over long periods. Therefore nerves can fray when a space cadet lands next to your cardboard ship and tells you it’s all wrong because they read it in a book somewhere, and it says so there. If you have 3 space cadets arguing 3 different warp drive designs, make a sail and tell them all to bugger off.

So back to the arrows.

At this stage, a project still has a main leader but it has a bunch of users and the odd person adding significant amounts of code. OSM was slightly strange in this regard as the users are in a sense much more important than coders. They’re out there in the rain on a Sunday, for free, mapping some awful housing estate. Without them out there, you have no map. Therefore the simplest thing that could work is OK, so long as you have people out there mapping.

Stage 2: Lumpy Effort / Frequency graph

And so we arrive at stage 2. The founder remains on the left, being one person throwing a lot of time at it. Next there is a lump of a few people doing key things. In OSM’s case this was a guy called Imi who made an amazing map editor called JOSM, a guy called Andy and one called 80n (yes, really) who did a lot of mapping and started to put together OSM’s ontology system. It doesn’t have an ontology but it’ll be our metaphor for now. Further up the frequency scale there are many people out doing small things. Mapping some streets. Fixing the odd bug. Publicising the project.

Notice that the area of each blob on the graph represents, roughly, the amount of work going in per unit time. Notice that the two right-hand blobs are larger in area than the one on the left. They are, in total, putting more work in.
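To make the "area" idea concrete, here is a rough sketch. Every number in it is made up for illustration (none of them are real OSM figures), and the group names and the `blob_area` helper are my own invention. The area of a blob is just the number of people in it multiplied by the effort each one puts in.

```python
# Hypothetical figures for illustration only; none of these come from OSM.
# Each entry: (label, number of people, hours per person per week)
groups = [
    ("founder", 1, 40.0),
    ("key contributors", 4, 12.0),
    ("casual contributors", 60, 1.0),
]


def blob_area(people, hours_each):
    """Area of a blob on the graph: frequency (people) times effort (hours each)."""
    return people * hours_each


# Work going in per week for each blob, in person-hours.
areas = {label: blob_area(people, hours) for label, people, hours in groups}

for label, area in areas.items():
    print(f"{label}: {area:.0f} person-hours per week")
```

With these invented numbers, each of the two right-hand blobs has a larger area than the founder's, even though nobody in them works anything like as hard as the founder does.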

There is a downside – the work is lumpy on the right. Someone maps an entire town and then disappears. Someone writes an entire editing suite and then gets a girlfriend. So the bars are shifting in all directions as time goes on. Who knows what the project will look like next month?

Stage 3: Effort / Frequency graph smooths out to a curve

Eventually the project has so many people that the distribution smooths out.

While not mathematically correct, this graph is often called the long tail. The effort of a few full-time individuals on the left is equal to that of thousands of contributors spending a few minutes a day. OSM isn’t there yet, but it’s getting close. That leads to a specific set of arrow lengths: many short ones and a few bigger ones.

What does this say about direction, too? Revisiting our stages, I think the first two look something like this:

Yes, some of the lines are pointing backward.

So in stage one, you have your one founder. Stage two attracts people who think the whole thing is crazy, or start rival projects – hence they’re pointing the other way. You have people with different focuses, different ideas and so these are at an angle. Different sizes as they’re throwing varying sizes of effort in.

Last, as the graph smooths out and the ideas and technologies are accepted, you’ll see something much more like the cover of that book.
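For the physics-class version of the arrows, here is a minimal sketch that treats each contributor as a vector and adds them up. The arrow lengths and angles are invented for illustration, and `net_arrow` and `efficiency` are hypothetical helper names, not anything from the book or from OSM. An angle of 0 degrees points right, 180 points backward.

```python
import math


def net_arrow(arrows):
    """Add arrows given as (length, angle_in_degrees) into one (x, y) vector."""
    x = sum(length * math.cos(math.radians(angle)) for length, angle in arrows)
    y = sum(length * math.sin(math.radians(angle)) for length, angle in arrows)
    return x, y


def efficiency(arrows):
    """Fraction of total effort that ends up as rightward progress."""
    total_effort = sum(length for length, _ in arrows)
    rightward, _ = net_arrow(arrows)
    return rightward / total_effort


# Invented stage-two project: a founder, two people at an angle,
# a rival pulling backward and someone heading off sideways.
stage_two = [(5.0, 0), (2.0, 45), (2.0, -45), (1.5, 180), (1.0, 90)]

# Invented mature project: a big arrow plus many small ones, all pointing right.
mature = [(5.0, 0)] + [(0.5, 0)] * 20

print(f"stage two: {efficiency(stage_two):.2f} of the effort moves the project forward")
print(f"mature:    {efficiency(mature):.2f} of the effort moves the project forward")
```

In the stage-two list a good part of the effort cancels out or goes sideways, which is why its big yellow arrow would be a lot shorter than the sum of its parts. In the mature list nearly everything lands in the same direction.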